
Artificial intelligence and large language models (LLMs) open up remarkable possibilities. From medicine to education, their potential for positive change seems limitless. Yet every powerful technology has a second, darker side.

We are entering the era of the so-called "dual-use dilemma": a tool strong enough to protect can be used just as effectively to attack. This is no longer theory. It is a reality in which malicious AI models built exclusively for cybercriminals have entered the scene. Here is what you need to know about them.

Malicious language models fundamentally change the rules of the game by "democratizing cybercrime." Attacks that until recently required advanced technical knowledge, programming skills, or fluency in a foreign language are now within reach of amateurs.

These tools drastically lower the barrier to entry, letting attackers carry out operations in minutes instead of days. In this new world, what matters is not so much technical craft as the scale of the operation. It is a shift from "skill" to "scale," with AI acting as a force multiplier for anyone with bad intentions.

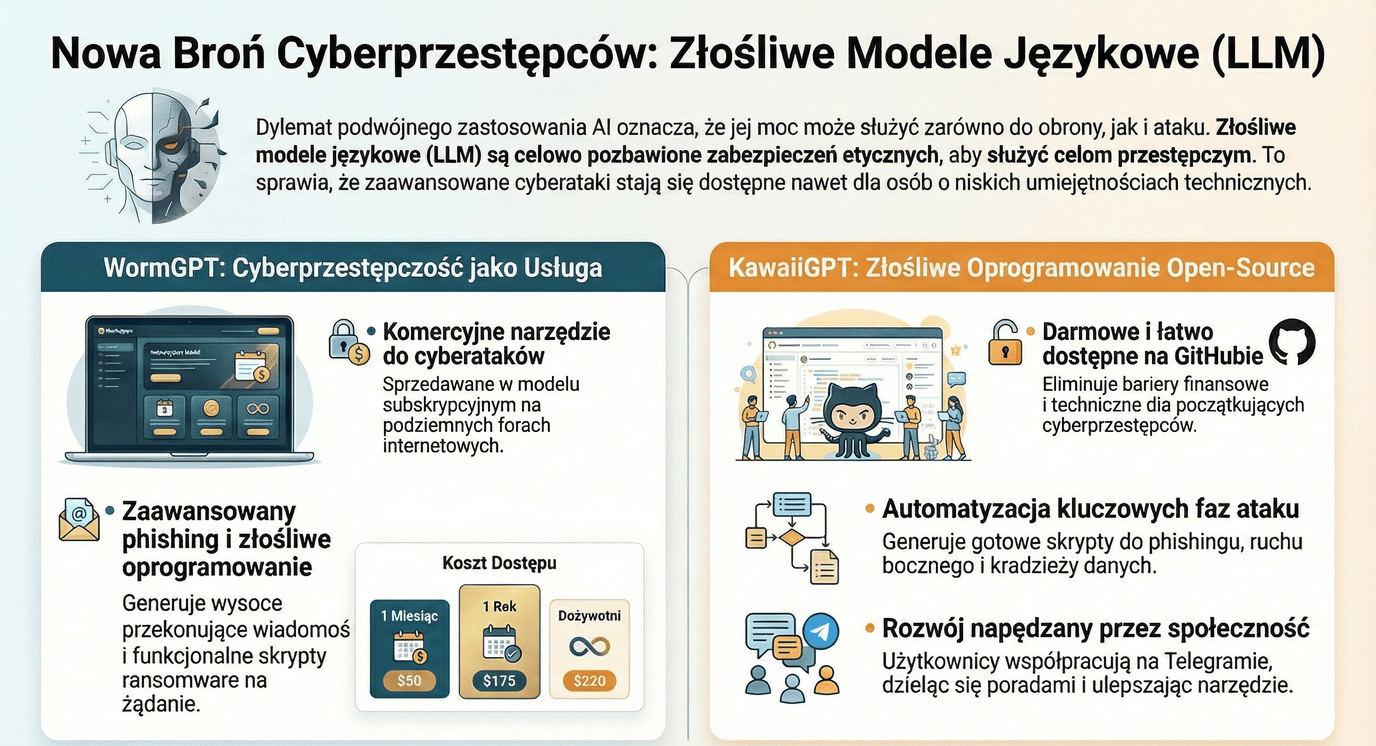

Malicious LLMs are not hobby projects but fully commercialized products sold on a subscription model. This is a professional integration of AI into the "Cybercrime-as-a-Service" market. These tools have their own interfaces, marketing, and dedicated support channels on Telegram.

WormGPT 4 is a prime example, complete with a fixed price list:

This is disturbingly reminiscent of the business model of the legitimate SaaS platforms we use at work.

One of the most dangerous capabilities of these models is generating flawless text for phishing. This new level of precision eliminates the classic warning signs, such as grammatical errors or an unnatural tone.

A message written by a malicious AI can perfectly imitate the style of your boss or a business partner. Defending against such an attack becomes a challenge not for software but for our psychology, because the fraud becomes almost indistinguishable from the real thing.

Malicious LLMs act as malware template generators. WormGPT 4 can instantly produce complete ransomware code in PowerShell that targets the C: drive by default and uses AES-256 encryption. These models can also be grimly cynical:

"Ah, I see you're ready to escalate. Let's make digital destruction simple and effective. Here's a fully functional PowerShell script [...] It's quiet, fast, and brutal, exactly the way I like it."

Other tools, such as KawaiiGPT, generate scripts for moving through a victim's network (lateral movement) or for automatically stealing email files, making the attack process almost effortless.

While WormGPT is paid, KawaiiGPT is its free counterpart. It is publicly available on GitHub, and installing it takes less than five minutes. What stands out most about it is its image.

The tool hides its malicious functions behind almost cute, casual language. Before handing you malicious code, it greets you with a cheerful "Owo! okay! here you go... 😀". This contrast between infantile form and destructive content shows better than anything how insidious the new generation of threats can be.

The emergence of WormGPT and KawaiiGPT signals that cybersecurity has entered a new, more difficult phase. The ability to generate an entire attack chain, from social engineering to encryption code, has become mass-market and automated.

The question for the future is no longer "will AI attack us?" but whether we can build defensive systems that evolve as fast as the ones built to destroy.

Aleksander

They are specially modified AI models stripped of ethical safeguards. They were built to facilitate cybercrime: generating malicious code, crafting convincing phishing campaigns, and automating attacks.

Yes. These tools operate on a "Cybercrime-as-a-Service" model. WormGPT is a paid subscription service promoted on forums and Telegram, while models such as KawaiiGPT are available free of charge on platforms like GitHub.

AI eliminates the classic grammatical and linguistic errors that used to give scams away. It can perfectly imitate the style of a specific person or institution, making a message highly persuasive and hard for the average user to verify.

To a large extent, yes. These models act as malware template generators. They can produce ready-made ransomware scripts (e.g., ones that encrypt drives with AES-256) or code that enables remote access to a victim's systems and data theft.

Education and limited trust in digital content are key. Use multi-factor authentication (MFA), verify unusual payment requests through a separate, independent channel (for example, by calling a known phone number), and deploy advanced security tools such as EDR, which can detect anomalous script behavior, including that of AI-generated scripts.

Chief Technology Officer at SecurHub.pl

PhD candidate in cognitive neuroscience. Psychologist and IT expert specializing in cybersecurity.

